Creating Chessort: The Chess Puzzle Sorting Game

Introduction

I've recently been enjoying playing chess, especially chess puzzles. During some of my games and puzzles, I often found the top move but failed to gauge the strength of the second-best and third-best moves. So, I searched for a game that would allow me to sort moves based on their strength but couldn't find anything. That's when I decided to create such a game myself.

Planning

The idea is simple. Given a chess position and a certain number of legal moves, sort them from strongest to weakest and then check to see if you are correct. For this idea, we'd need data that provides a board position (by its FEN) and also some legal moves you can sort. A backend server to serve this data to us from a database. A frontend to display the app and allow us to play.

Data Preparation

It would be difficult and time-consuming for us to manually create positions and legal moves for us to play. Even randomising this is a poor choice. Instead, we are privileged to use the Lichess.org Open Database. The puzzle database provides us with 4,062,423 interesting positions that have been pre-generated! Furthermore, we get some useful metrics such as Rating Deviation, Popularity, and Number of times Played. We can use these metrics to further filter down the results into picking tried and tested top-notch positions.

The decision was made to use 4 moves in the game as an interesting number of moves to sort as 2-3 is not challenging or interesting and 5 is a little too many. We also needed to have at least 4 legal moves per position for us to be able to create a game. However, if we had more legal moves we could make more games since we could pick any 4 distinct moves from our move set. We decided we wanted the top 50 moves for any given position. We don't really care about the worst moves beyond 50+ as they're not really interesting for us to sort.

The implication of these decisions means that for any single position, we would have an enormous number of games we could generate. Using the combination formula, we could generate C(50, 4) = 230,300 distinct sorting games from a single puzzle! This means that we would not have to process all that many puzzles from the Lichess open database to have a huge pool of games ourselves. Now of course, not every game is interesting. Sorting the 33rd, 34th, 37th, and 42nd moves is a bit random, not fun and pointless, so we would rely on a good game generation strategy (more on this later).

The puzzle data also did not include anything except the top best move sequence for each puzzle. This meant that we would have to generate this ourselves. We decided to use Stockfish, a strong open-source chess engine, for the simple reason that it combines my two greatest loves (stocks and fish). Stockfish would help us analyse each position we had and generate up to 50 top legal moves for it and their engine evaluation (how strong the move is if played).

Stockfish is wildly configurable. Tweaking its parameters could result in different output for legal moves. We would pre-generate the data for our game as real-time generation is much too slow. One consideration made is we may want to regenerate the data at a future time to tweak parameters. We wanted to do this in a controllable way so it would be easy to overwrite rows in our database, etc. Our approach was to transform our input Lichess data into Chessort chunked data. Each chunk represents a certain number of processed lines from the Lichess input CSV data. We decided to use chunk sizes of 1000. This way, regardless of the number of filtered lines and parameters, each chunk always represented the same number of input lines.This is what the metadata for an example chunk looks like:

{

"stockfishVersion": "16.1",

"offset": 1700000,

"limit": 1000,

"evaluationDepth": 25,

"multipv": 50,

"minimumMovesRequired": 4,

"minPopularityRequired": 90,

"minNumberPlaysRequired": 100,

"maxRatingDeviation": 100,

"inputLichessFileSha256": "a480b5c25389d653800889bcf223d32a622249bd3d6ba3e210b8c75bc8092300",

"outputFileSha256": "a32d9fa120f36b32b9df1be018794af9c3384ca32d1c1305dc037fd6e53c1afb"

}Notes:

- We use an evaluation depth of 25 for an adequately accurate result suitable for analysis games. Anything in the range of 20-25 is suitable here.

- Each 1000-sized chunk takes roughly 70 minutes to produce on my machine (running 24 threads, 64GB RAM on a Ryzen 3900X).

- SHA256 hashes are stored as metadata to ensure reproducibility.

- 90+ popularity, 100+ games played, and a max rating deviation of 100 were found to produce super interesting games.

Database

Our database is a simple MariaDB cluster that I already run to power various projects. We use an ingestion script to process our Chessort CSV data and ingest it into the database.

The only thing of note here is we convert the relative engine evaluations into global evaluations during the ingestion process. For example, if our input CSV had #1 as the evaluation and it was Black's turn to play, we'd store this as #-1 instead. So each evaluation metric represents the global evaluation in our database.

Backend (Server)

Flask was used to power the backend server. The server is responsible for generating games, serving them, and validating solutions.

Two interesting problems arose in this space:

- Game generation.

- Difficulty estimation for humans.

Game Generation

Generating games is a key part of the project. Let's say we have 1 position and 50 moves. If we simply picked any 4 distinct moves, this could create a boring game. Boring games are those where all the moves are extremely terrible since it is not useful in chess to analyse only simply awful moves. In chess, the goal is to constantly find the best move. Example evaluations: -1234, -1322, -1832, -2011.

There are also extremely difficult games which are impossible for humans to solve. Consider these evaluations: +2, +1, -2, -4. Not even the best chess players would be able to tell apart these four moves that are all extremely neutral in strength.

Therefore, to create a fun game, we need a nice variance between the moves selected. We should pick some strong moves and also some weaker ones. Some moves that are good for the turn player, and some that are bad, etc. Of course, we can tweak the selection parameters to generate games of varying difficulty.

We could make this extremely easy game: #1, +500, -500, -1 where most players should find both mates and then be able to sort the remaining 2 moves based on which is better for the turn player.

To tackle game generation, we create a generic interface MoveSelectionStrategy which looks like this:

class MoveSelectionStrategy:

def select_moves(self, moves: list[Move], num_required_moves: int) -> list[Move]:

raise NotImplementedError("This method should be implemented by subclasses.")

def can_handle(self, moves: list[Move], num_required_moves: int) -> bool:

if len(moves) == 0 or num_required_moves <= 0:

return False

if num_required_moves > len(moves):

return False

return TrueNow we can create many different strategies which accept a set of moves and produce the number of required moves as output. Each strategy has a can_handle method to determine if it will be able to produce a valid output and a select_moves method to produce the output.

The strategies can also make use of a helper SmartBucket class which sorts each move into distinct buckets based on its evaluations. This is useful because it's often undesirable to pick 2 moves of equal strength as they are correct in multiple positions when sorting. However, we are free to do this if we intentionally want to create easier games.

As of writing, there are two strategies being used:

- Top Spread Strategy: Picks the top move and then the next 3 top move with a minimum normalised evaluation strength spread allied.

- Random Generation Strategy: Used as a fallback in case all other strategies fail (this strategy is guaranteed to pass).

Difficulty Estimation for Humans

Another interesting problem is assigning a difficulty to a game. Given a position and a set of moves, how can you gauge how difficult it will be for a human to sort these moves?

Naturally, we assume humans are able to sort things when there is a clear difference between them. In chess, humans should be able to tell apart a mate in 1 (#1) from a mate in 2 (#-2) but maybe not +450 from +420. Therefore, the variance between evaluation strengths is the determining factor.

In general, this is how difficult it is to compare different types of moves for a human, from easiest to hardest:

- Mate and centipawn (e.g.,

#1and+200) - Mate and another mate (same side) (e.g.,

#1and#4) - Centipawn and another centipawn (e.g.,

+400,+50)

After some collaboration and guidance from various members of the Lichess and Stockfish developer communities on Discord, I was able to formulate a solution. Please note this solution is a work in progress and by no means perfect (it will iterate over time).

Solution Overview

The solution involves mapping mate values to a [0, 0.5] range. We do this by passing the mate value (like 1, 2, 3, etc.) into a sigmoid function. A sigmoid function takes its input from [∞, -∞] and normalises it into a [1, 0] range. The function is exponential in nature, so values in the middle (0.5) are more varied compared to values at each boundary (1 and 0). Once we have the mate values in a [0, 0.5] range for both white and black, we offset these values so that white mate values fall into a [1.5, 0.5] range and black values fall into a [0, -0.5] range.

For centipawn values, we also pass them through a sigmoid function. This maps all centipawn values (positive or negative) into a [1, 0] range.

- Mate moves get exponentially closer the further they are from mate in 1 (

#1). - Centipawn moves get exponentially closer the further they are from neutral (

0).

We put these lists together so that mates for white come first, then centipawn values, and finally mates for black. This list is then normalised using linear scaling into a [1, 0] range. This gives us a normalised strength mapping for each move.

Difficulty Comparison

To compare how difficult it is for a human to sort these values, we find the adjacent differences (diffs) between each value. The larger the adjacent diff, the easier it is to sort (because the strengths are further apart). There is a special case for adjacent diff values of 0. A value of 0 indicates the same exact strength and is excluded (because in our game, if two moves are the same strength, we accept any ordering, which makes the game significantly easier).

Finally, we take the adjacent diffs and compute their harmonic mean. We use a harmonic mean here (over a regular mean) so that smaller values greatly influence the final difficulty. This is because even if one pair of move comparisons is hard and the rest are easy, the overall difficulty is still hard (to sort all adjacent pairs).

Final Difficulty Mapping

If we then take this result and put it into a [0, 100] range, we get a numerical value that represents difficulty.

It's important to note:

- A centipawn to centipawn comparison will be bound to a [50, 100] output.

- A centipawn to mate comparison will be bound to a [25, 100] output.

- A mate to mate (same side) comparison will be bound to a [75, 100] output.

- A

#1and-#1comparison produces the smallest output of 0.

Therefore, we need to group the final difficulty number into exponential bounds like so:

def map_difficulty(difficulty):

if not (0 <= difficulty <= 100):

raise ValueError("Difficulty must be within the range of 0 to 100.")

if difficulty < 50:

return Difficulty.BEGINNER

elif 50 <= difficulty < 75:

return Difficulty.EASY

elif 75 <= difficulty < 87.5:

return Difficulty.MEDIUM

elif 87.5 <= difficulty < 95:

return Difficulty.HARD

else:

return Difficulty.MASTERThis mapping helps classify the difficulty level of the game for human players, ensuring a balanced and engaging experience.

Frontend (App)

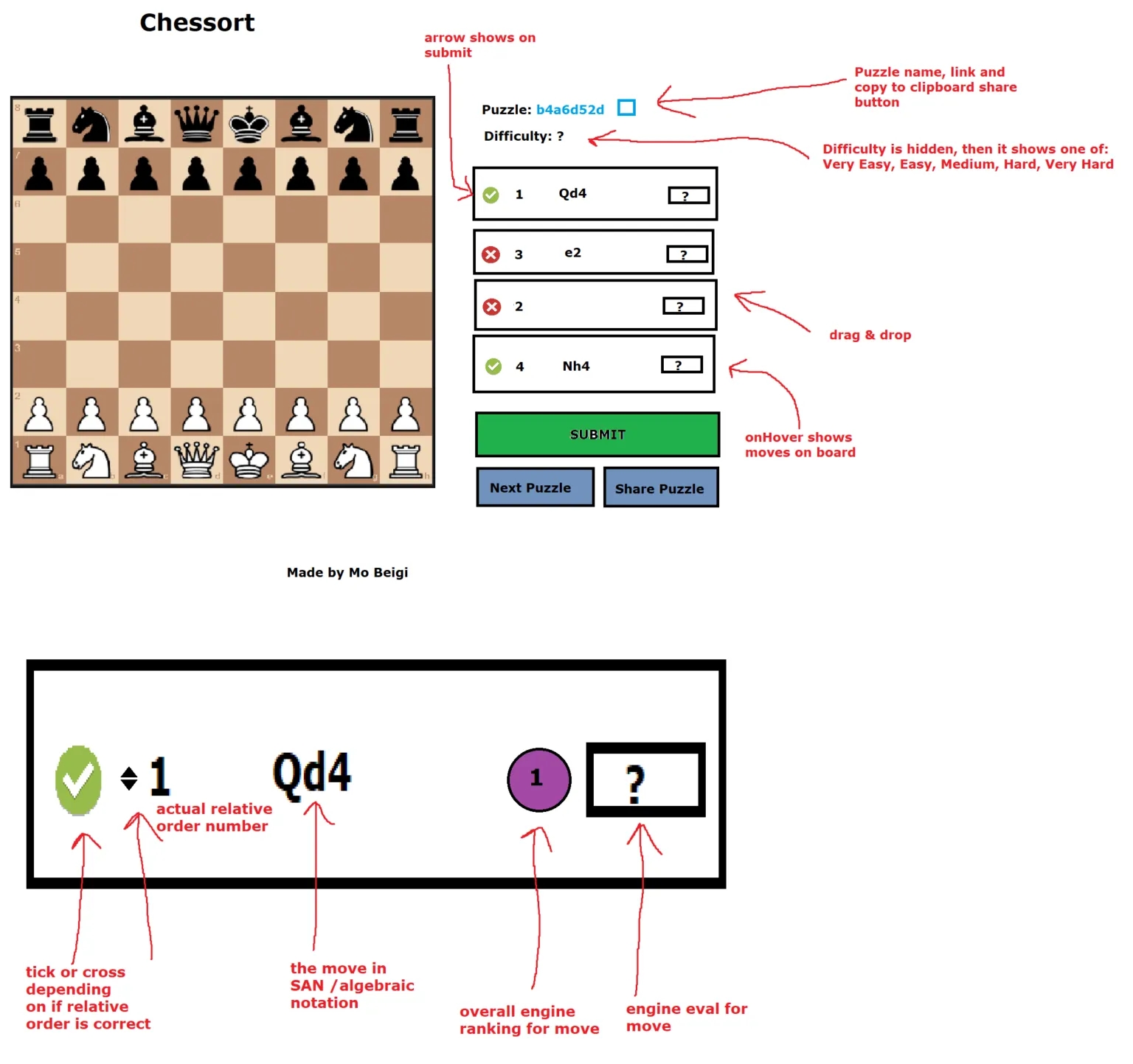

Finally, we need an juicy frontend to play the game. After some deep thought, I came up with the following brilliant mock-up:

As we all know, Paint is the industry leader for design mock-ups. We also know my design skills are unparalleled, which led to this beautiful masterpiece. Look at the subtle chessboard colouring, the tasteful misalignment of the cards. Oh my god, it even has red arrows.

Anyway, our design is simple: a responsive two-column design for the game with the chessboard on the left and a panel on the right. The panel would contain the cards to sort, and you would use drag and drop to sort the cards. Once you submit your answer, the cards would then reveal which ones are correct and which are incorrect. We'd then also display some prominent information like the engine evaluation strength and the engine's overall rank for that move. That's it!

Our choice for the frontend framework was React + Vite + SWC + TypeScript. Our goal was a lightweight and fast website because it didn't really need much to work. Although, as a perfectionist, I did spend a lot of time polishing various aspects of the website.

We made some useful design and feature changes:

- Combine submit/next buttons into a single one.

- Add an action bar for useful actions.

- Add keyboard support for accessibility.

- Add move previews (selecting a card previews the move on the chessboard).

- Add hit counters (how often a game and how often a position was played).

- Support full responsiveness (for every viewport dimension imaginable).

- Support WebKit (yes, this has its own category, how special!).

Working on the frontend was relatively straightforward with the exception of supporting WebKit. I spent an insane amount of time debugging an SVG render bug for WebKit that caused my chess piece SVGs to turn black during some rerenders. You can see the reproduction in this PoC Codesandbox. This turned out to be an old bug present in WebKit, which was on the only iOS device I had to test with (my iPad). Updating my iPad to the latest iOS version resolved the issue. An important takeaway here is to always update your test devices!

Final Thoughts

Thank you for following along with the creation of Chessort! I hope you found the process as intriguing as I did. Now, it’s time for you to experience the game yourself. Head over to Chessort and start sorting those moves!

Additionally, if you're interested in the code behind Chessort, you can check out the open-source project on GitHub. Chessort is licensed under the GPL, and contributions are always welcome.

Happy sorting!